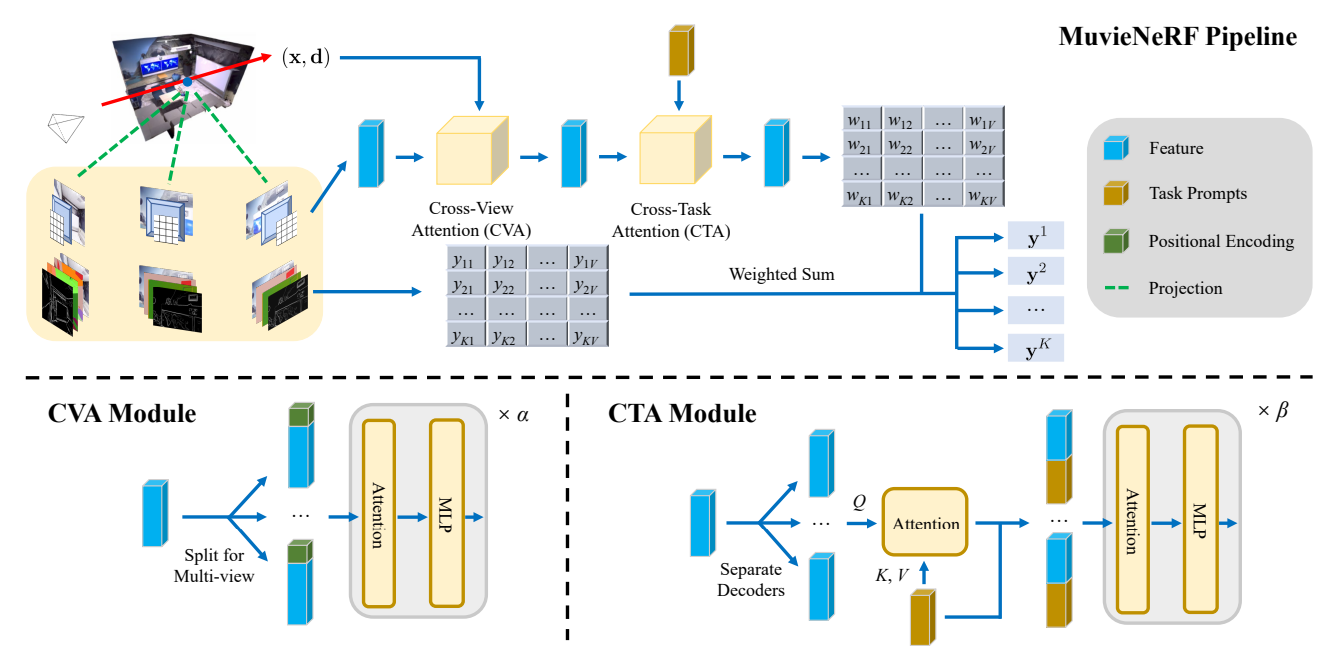

MuvieNeRF Pipeline

Multi-task visual learning is a critical aspect of computer vision. Current research focuses predominantly on the multi-task dense prediction setting, which overlooks the intrinsic 3D world and its multi-view consistent structure and lacks the capacity for versatile imagination.

To address these limitations, we present a novel problem setting, multi-task view synthesis (MTVS), which reinterprets multi-task prediction as a set of novel-view synthesis tasks for multiple scene properties, including RGB. To tackle MTVS, we propose MuvieNeRF, a framework that incorporates both multi-task and cross-view knowledge to synthesize multiple scene properties simultaneously. MuvieNeRF integrates two key modules, Cross-Task Attention (CTA) and Cross-View Attention (CVA), which enable efficient use of information across multiple views and tasks.
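To make the roles of the two modules concrete, here is a minimal NumPy sketch of attention along two different axes. It is not the authors' implementation: it assumes per-point features arranged as `(views, tasks, dim)`, uses single-head scaled dot-product self-attention with no learned query/key/value projections, adds a residual connection, and applies CVA before CTA. All of these are illustrative choices, and `cross_view_attention` and `cross_task_attention` are hypothetical names.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x):
    """Single-head scaled dot-product self-attention over axis -2.

    x: (..., N, D) with N tokens. Assumption: identity Q/K/V projections.
    """
    d = x.shape[-1]
    scores = x @ np.swapaxes(x, -1, -2) / np.sqrt(d)  # (..., N, N)
    return softmax(scores, axis=-1) @ x               # (..., N, D)

def cross_view_attention(feats):
    """Hypothetical CVA: for each task, tokens are the source views.

    feats: (V, T, D) -> (V, T, D), with a residual connection (assumption).
    """
    x = np.swapaxes(feats, 0, 1)                # (T, V, D): batch over tasks
    return feats + np.swapaxes(self_attention(x), 0, 1)

def cross_task_attention(feats):
    """Hypothetical CTA: for each view, tokens are the tasks.

    feats: (V, T, D) -> (V, T, D), with a residual connection (assumption).
    """
    return feats + self_attention(feats)        # batch over views

# Usage: 3 source views, 6 tasks (RGB + SN, SH, ED, KP, SL), 16-dim features.
rng = np.random.default_rng(0)
feats = rng.standard_normal((3, 6, 16))
out = cross_task_attention(cross_view_attention(feats))
print(out.shape)  # (3, 6, 16)
```

The only difference between the two functions is which axis plays the role of the token sequence: CVA mixes information between views of the same task, while CTA mixes information between tasks at the same view.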

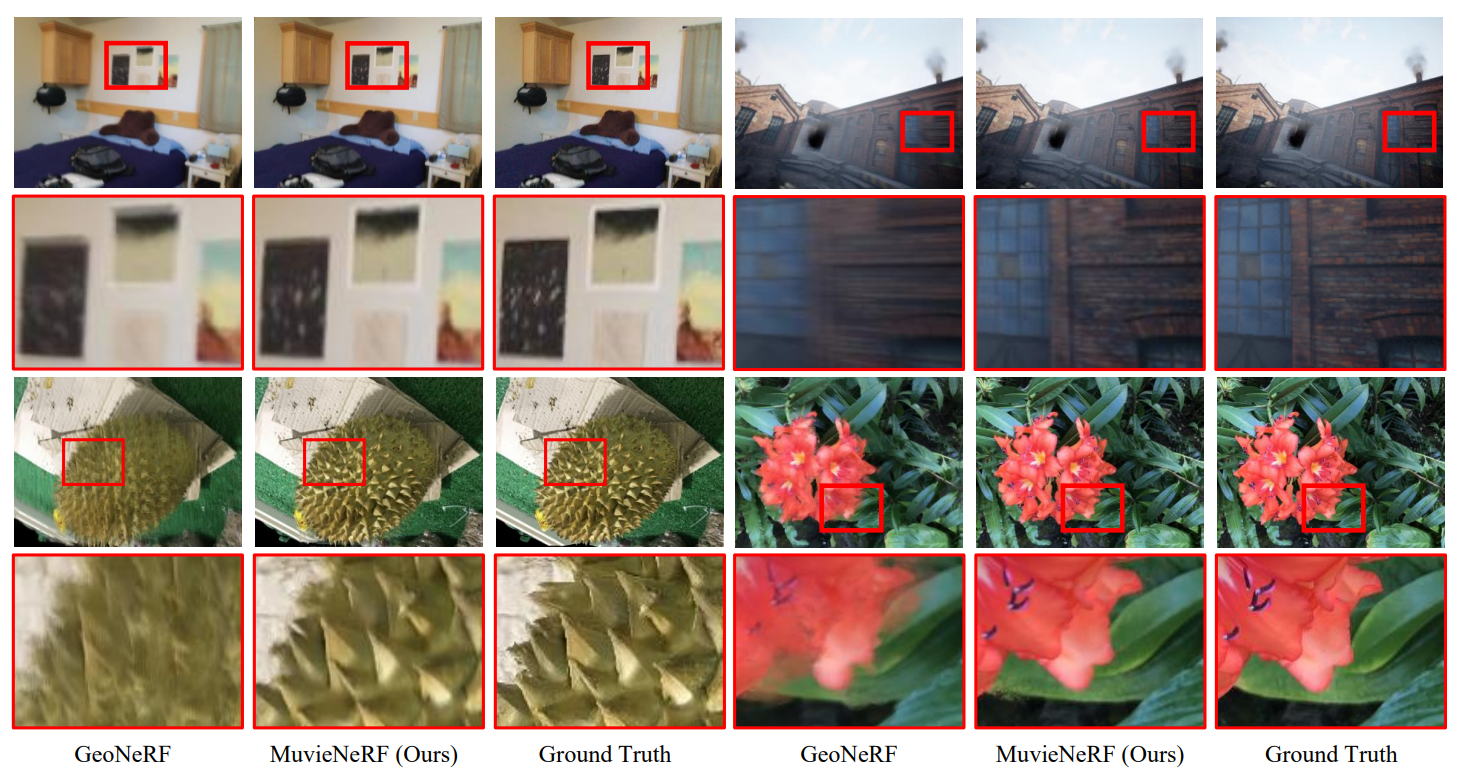

Extensive evaluations on both synthetic and realistic benchmarks demonstrate that MuvieNeRF is capable of simultaneously synthesizing different scene properties with promising visual quality, even outperforming conventional discriminative models in various settings. Notably, we show that MuvieNeRF exhibits universal applicability across a range of NeRF backbones.
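One reason a multi-property head can sit on top of different NeRF backbones is that volume rendering only needs the backbone's densities along each ray. The page does not spell out the rendering step, so the following is a sketch under an assumption: each scene property is composited with the standard NeRF volume-rendering weights, the same way Semantic-NeRF renders semantic labels. `render_weights` and `render_properties` are hypothetical helpers.

```python
import numpy as np

def render_weights(sigma, deltas):
    """Standard NeRF compositing weights for S samples along one ray.

    sigma: (S,) non-negative densities from the backbone.
    deltas: (S,) distances between consecutive samples.
    """
    alpha = 1.0 - np.exp(-sigma * deltas)  # per-sample opacity
    # Transmittance T_i = prod_{j<i} (1 - alpha_j), with T_0 = 1.
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alpha[:-1]]))
    return trans * alpha                   # (S,)

def render_properties(sigma, deltas, props):
    """Composite every per-sample property with the same weights.

    props: dict mapping a property name to an (S, C) array, e.g. RGB (C=3),
    surface normals (C=3) or semantic logits (C=num_classes).
    """
    w = render_weights(sigma, deltas)
    return {name: w @ values for name, values in props.items()}  # each (C,)

# Usage with random per-sample predictions for one ray of 8 samples.
rng = np.random.default_rng(0)
S = 8
sigma = rng.uniform(0.0, 2.0, S)
deltas = np.full(S, 0.1)
props = {
    "rgb": rng.uniform(size=(S, 3)),
    "normal": rng.standard_normal((S, 3)),
    "semantic": rng.standard_normal((S, 5)),
}
out = render_properties(sigma, deltas, props)
print({k: v.shape for k, v in out.items()})
# {'rgb': (3,), 'normal': (3,), 'semantic': (5,)}
```

Because every property reuses one set of weights, swapping the backbone only changes where `sigma` comes from; the compositing code stays the same.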

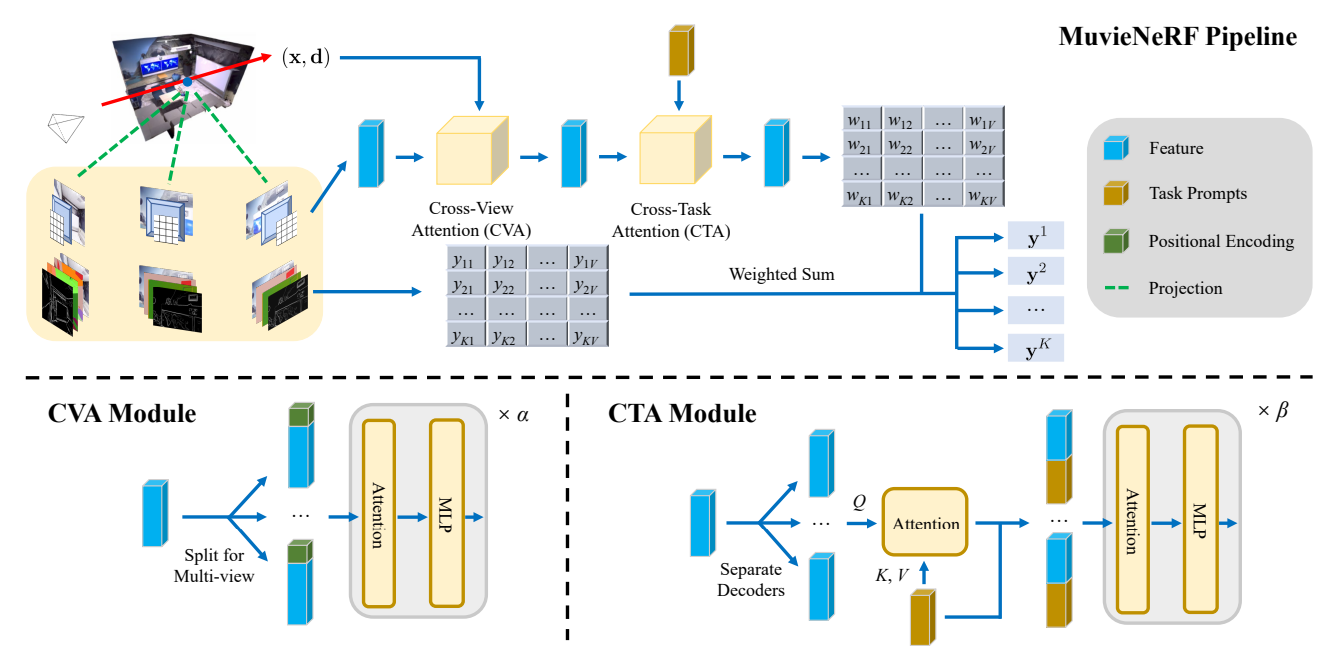

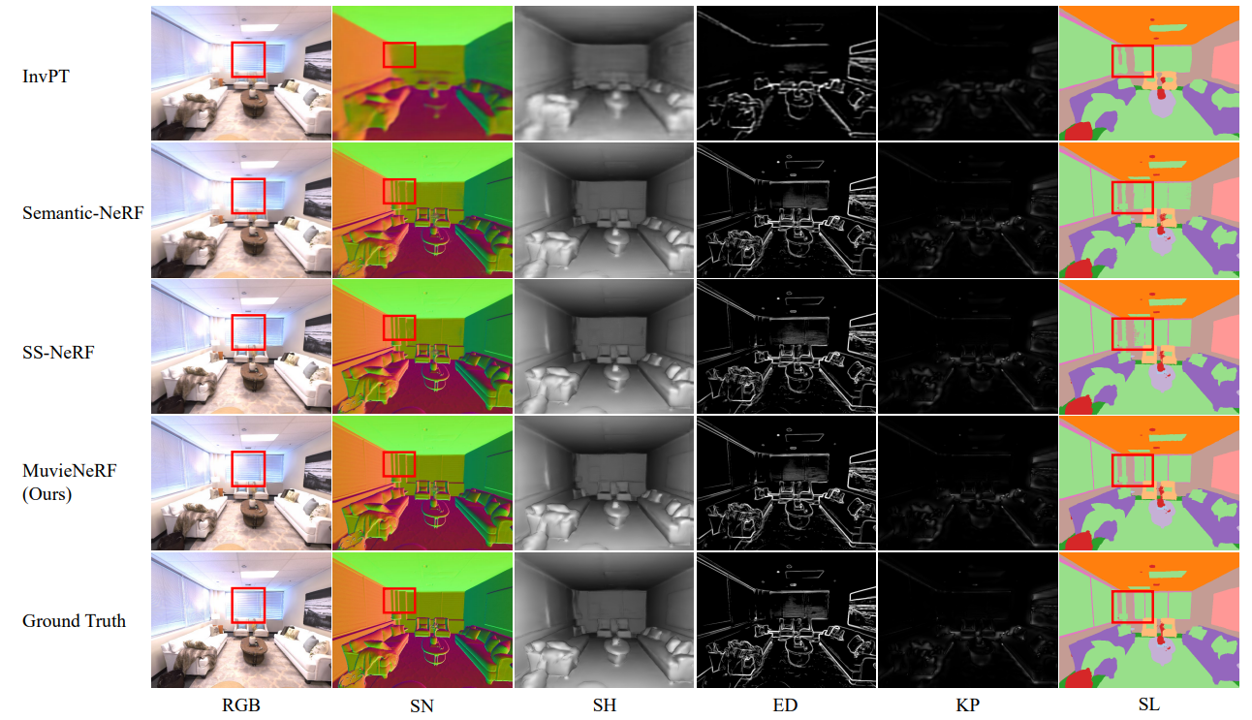

We conduct experiments on two datasets: Replica and SceneNet RGB-D. Five tasks beyond RGB are chosen: surface normal prediction (SN), shading prediction (SH), edge detection (ED), keypoint detection (KP), and semantic label prediction (SL). Baseline methods include the state-of-the-art discriminative model InvPT, the single-task NeRF model Semantic-NeRF, and the naive multi-task NeRF model SS-NeRF.

Qualitative comparison on the Replica dataset:

Qualitative comparison on the SceneNet RGB-D dataset:

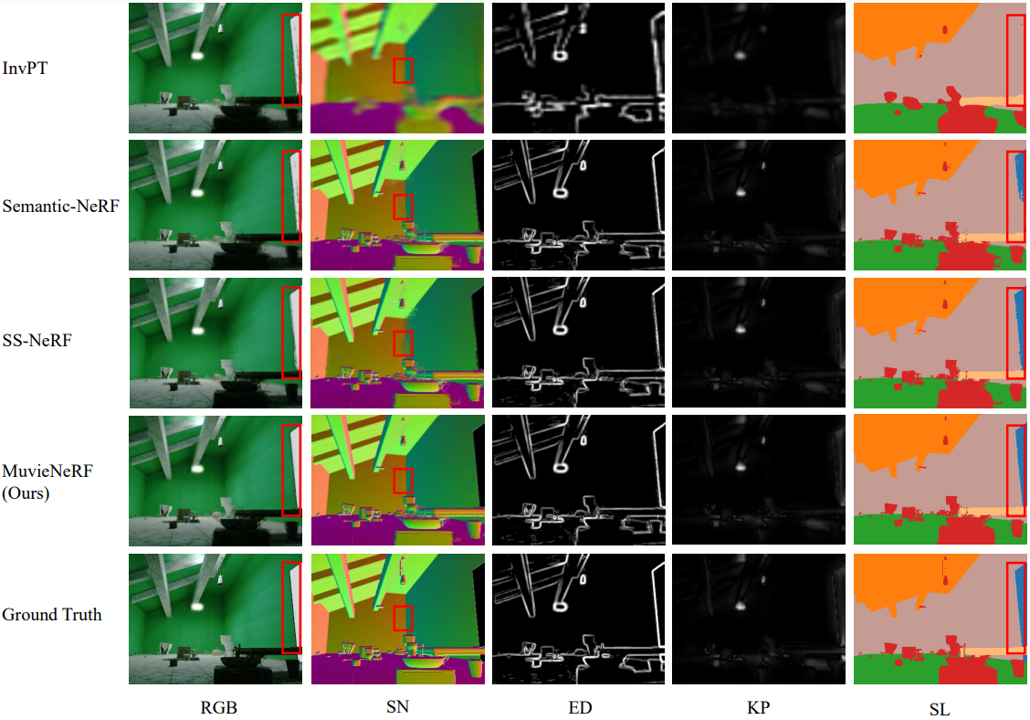

The knowledge of multi-task synergy learned during training benefits generalization to out-of-distribution datasets, boosting the performance of novel-view RGB synthesis even when 2D task signals from the input views are unavailable.

Four out-of-distribution datasets are evaluated: ScanNet, TartanAir, BlendedMVS, and LLFF (from left to right, top to bottom):

@inproceedings{zheng2023mtvs,
  title={Multi-task View Synthesis with Neural Radiance Fields},
  author={Zheng, Shuhong and Bao, Zhipeng and Hebert, Martial and Wang, Yu-Xiong},
  booktitle={ICCV},
  year={2023}
}